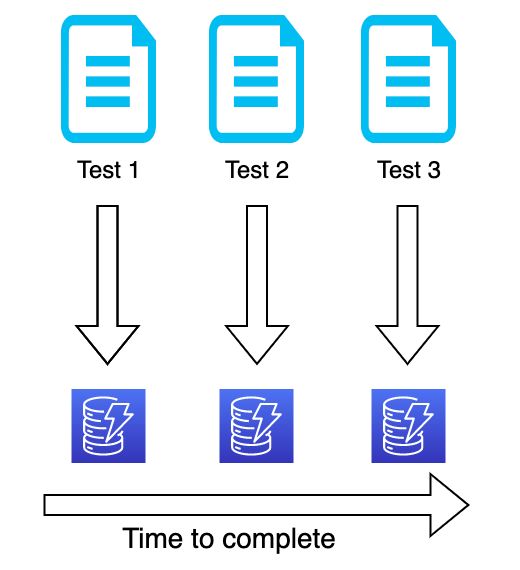

In my previous blog post, we saw how to run integration tests in parallel. In this post, I would like to share my approach to running integration tests in parallel against DynamoDB.

In most of my projects I have used DynamoDBMapper to interact with DynamoDB, because it hides the low-level details and provides a fluent API. If, like me, you have used DynamoDBMapper, you have probably noticed that you either hard-code the table name with the @DynamoDBTable annotation or pass the table name to every method call. Personally, I prefer the annotation approach. However, when we try to run tests in parallel, changing the table name becomes tricky because it is hard-coded in the Java codebase. That is when I started digging into the AWS SDK documentation and found an interesting option: TableNameOverride. What does it do? Depending on how it is configured, the mapper uses a different table name from the annotated one when making calls.
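To make the idea concrete without the AWS SDK on the classpath, here is a small stdlib-only model of how an override changes the table name that comes from the entity annotation. It is a sketch under assumptions: `@Table` is a hypothetical stand-in for `@DynamoDBTable`, `PersonDocument` is a hypothetical entity, and `resolveTableName` only mimics the documented behaviour of `TableNameOverride.withTableNamePrefix` and `TableNameOverride.withTableNameReplacement`. It is not the SDK's own resolver.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Hypothetical, stdlib-only model: this is NOT the AWS SDK's table name resolver.
final class TableNameResolution {

  private TableNameResolution() {}

  // Hypothetical stand-in for @DynamoDBTable(tableName = "...")
  @Retention(RetentionPolicy.RUNTIME)
  @Target(ElementType.TYPE)
  @interface Table {
    String tableName();
  }

  // Hypothetical entity mapped to the "persons" table
  @Table(tableName = "persons")
  static final class PersonDocument {}

  // An override carries either a full replacement or a prefix; null means "not set"
  static String resolveTableName(Class<?> entity, String prefix, String replacement) {
    Table table = entity.getAnnotation(Table.class);
    if (table == null) {
      throw new IllegalArgumentException(entity.getName() + " is not annotated with @Table");
    }
    if (replacement != null) {
      return replacement; // like withTableNameReplacement: the annotated name is ignored
    }
    // like withTableNamePrefix: the prefix is prepended to the annotated name
    return prefix == null ? table.tableName() : prefix + table.tableName();
  }

  public static void main(String[] args) {
    System.out.println(resolveTableName(PersonDocument.class, null, null));      // persons
    System.out.println(resolveTableName(PersonDocument.class, "random_", null)); // random_persons
  }
}
```

The entity code never changes; only the configuration decides which physical table is used, which is exactly what parallel tests need.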

```java
DynamoDBMapperConfig config = DynamoDBMapperConfig.builder()
    .withTableNameOverride(
        DynamoDBMapperConfig.TableNameOverride.withTableNamePrefix("random_"))
    .build();
```

With the above config in place (for example, passed to the DynamoDBMapper constructor), when we call dynamoDBMapper.load(PersonDocument.class, id), the mapper figures out the table name from PersonDocument (let's assume persons), prefixes it with random_, and uses the resulting name, random_persons, throughout the operation. This lets us change the table name without much hassle. However, it becomes tricky with batch operations such as batchLoad, because they return a Map<String, List<Object>> keyed by table name. Since the mapper uses the updated name everywhere once the override is set, the result map is keyed by the updated table name as well. For example, the result looks like the following, and any code that reads it by the original table name (persons) stops working:

```json
{
  "random_persons": [
    {
      "id": "1",
      "name": "John"
    }
  ]
}
```

So we need a small tweak so that, when the mapper returns the result, it uses the original table name instead of the prefixed one. The simplest option is to create a custom DynamoDBMapper that overrides the batch operations of the standard DynamoDBMapper:

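```java
// Declared as a nested class inside a @TestConfiguration class (see LocalDynamoConfig)
@Component
public static class TableNameOverriddenDynamoDbMapper extends DynamoDBMapper {

  // Add constructors ....

  @Override
  public Map<String, List<Object>> batchLoad(
      Map<Class<?>, List<KeyPair>> itemsToGet, DynamoDBMapperConfig config) {
    final Map<String, List<Object>> result = super.batchLoad(itemsToGet, config);
    final DynamoDBMapperConfig.TableNameOverride override =
        config == null ? null : config.getTableNameOverride();
    final String tableNamePrefix = override == null ? null : override.getTableNamePrefix();
    if (tableNamePrefix == null) {
      // The config passed to this call carries no prefix, so keys are returned unchanged
      return result;
    }
    // Strip only a leading prefix from each key, e.g. "random_persons" -> "persons"
    return result.entrySet().stream()
        .collect(
            Collectors.toMap(
                entry ->
                    entry.getKey().startsWith(tableNamePrefix)
                        ? entry.getKey().substring(tableNamePrefix.length())
                        : entry.getKey(),
                Map.Entry::getValue));
  }
}
```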

Here I have only overridden batchLoad, but you can apply the same idea to the other batch operations as well.
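Because several batch results are keyed by table name, the prefix stripping can be pulled into one generic helper. The sketch below is hypothetical (`TablePrefixes.stripTablePrefix` is not an SDK method) and uses only the JDK. Besides `batchLoad` results, it should also fit the batch write operations in the v1 SDK, which return `List<DynamoDBMapper.FailedBatch>`; each `FailedBatch.getUnprocessedItems()` is a `Map<String, List<WriteRequest>>` that is likewise keyed by table name.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper (not part of the AWS SDK): maps "<prefix><table>" keys back to "<table>".
final class TablePrefixes {

  private TablePrefixes() {}

  static <V> Map<String, V> stripTablePrefix(Map<String, V> byTableName, String prefix) {
    if (prefix == null || prefix.isEmpty()) {
      return byTableName; // nothing to strip
    }
    Map<String, V> result = new LinkedHashMap<>();
    for (Map.Entry<String, V> entry : byTableName.entrySet()) {
      String key = entry.getKey();
      // Only a leading prefix is removed; any other key is kept as it is
      String original = key.startsWith(prefix) ? key.substring(prefix.length()) : key;
      if (result.containsKey(original)) {
        // Two keys collapsed to the same name: fail instead of silently dropping results
        throw new IllegalStateException("Duplicate table name after stripping: " + original);
      }
      result.put(original, entry.getValue());
    }
    return result;
  }

  public static void main(String[] args) {
    Map<String, List<Object>> loaded = Map.of("random_persons", List.of("John"));
    System.out.println(stripTablePrefix(loaded, "random_")); // {persons=[John]}
  }
}
```

Matching with `startsWith` instead of `String.replace` leaves table names that merely contain the prefix in the middle untouched, and the duplicate check surfaces a clash rather than hiding it.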

Putting everything together, the test code looks something like the following:

```java
// LocalDynamoConfig.java
package com.suman.config;

import com.amazonaws.services.dynamodbv2.AmazonDynamoDBAsync;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapperConfig;
import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapperConfig.TableNameOverride;
import com.amazonaws.services.dynamodbv2.datamodeling.KeyPair;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.boot.test.context.TestConfiguration;
import org.springframework.stereotype.Component;

import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

@TestConfiguration
public class LocalDynamoConfig {

  // Test-only mapper: every table name gets the per-context random prefix
  @Component
  public static class TableNameOverriddenDynamoDbMapper extends DynamoDBMapper {

    public TableNameOverriddenDynamoDbMapper(
        AmazonDynamoDBAsync dynamoDBClient,
        @Value("${app.config.random.prefix}") String tableNamePrefix) {
      super(dynamoDBClient, getDynamoDBMapperConfig(tableNamePrefix));
    }

    private static DynamoDBMapperConfig getDynamoDBMapperConfig(String tableNamePrefix) {
      return DynamoDBMapperConfig.builder()
          .withTableNameOverride(TableNameOverride.withTableNamePrefix(tableNamePrefix))
          .build();
    }

    @Override
    public Map<String, List<Object>> batchLoad(
        Map<Class<?>, List<KeyPair>> itemsToGet, DynamoDBMapperConfig config) {
      final Map<String, List<Object>> result = super.batchLoad(itemsToGet, config);
      final TableNameOverride override = config == null ? null : config.getTableNameOverride();
      final String tableNamePrefix = override == null ? null : override.getTableNamePrefix();
      if (tableNamePrefix == null) {
        // The config passed to this call carries no prefix, so keys are returned unchanged
        return result;
      }
      // Map "<prefix><table>" keys back to "<table>" so callers see the annotated names
      return result.entrySet().stream()
          .collect(
              Collectors.toMap(
                  entry ->
                      entry.getKey().startsWith(tableNamePrefix)
                          ? entry.getKey().substring(tableNamePrefix.length())
                          : entry.getKey(),
                  Map.Entry::getValue));
    }
  }
}
```

```java
// CustomActiveProfilesResolver.java
// Code snippet is from https://medium.com/@suman.maity112/reduce-build-time-by-running-tests-in-parallel-ec0d26f068a6
package com.suman;

import java.util.Arrays;
import java.util.stream.Stream;

import org.springframework.test.context.support.DefaultActiveProfilesResolver;
import org.apache.commons.lang3.RandomStringUtils;

public class CustomActiveProfilesResolver extends DefaultActiveProfilesResolver {

  // Adds a random profile so that each test class gets its own application context
  // instead of reusing a cached one
  @Override
  public String[] resolve(Class<?> testClass) {
    return Stream.concat(
            Stream.of(RandomStringUtils.randomAlphabetic(5).toLowerCase()),
            Arrays.stream(super.resolve(testClass)))
        .toArray(String[]::new);
  }
}
```

```java
// ConfigRandomizer.java
package com.suman;

import lombok.extern.slf4j.Slf4j;
import org.apache.commons.lang3.RandomStringUtils;
import org.springframework.boot.test.util.TestPropertyValues;
import org.springframework.context.ApplicationContextInitializer;
import org.springframework.context.ConfigurableApplicationContext;
import org.springframework.core.env.ConfigurableEnvironment;

import java.util.AbstractMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

@Slf4j
public class ConfigRandomizer
    implements ApplicationContextInitializer<ConfigurableApplicationContext> {

  private static final List<String> CONFIG_TO_BE_RANDOMIZED =
      List.of("app.config.random.prefix");

  @Override
  public void initialize(ConfigurableApplicationContext applicationContext) {
    final ConfigurableEnvironment environment = applicationContext.getEnvironment();
    final String prefix = RandomStringUtils.randomAlphabetic(5).toLowerCase();
    log.info("===================== Using {} as prefix", prefix);

    // Each listed property becomes "<random>_<current value>" for this context only
    final Map<String, String> updateConfig =
        CONFIG_TO_BE_RANDOMIZED.stream()
            .map(
                propertyName ->
                    new AbstractMap.SimpleImmutableEntry<>(
                        propertyName,
                        "%s_%s".formatted(prefix, environment.getProperty(propertyName, ""))))
            .collect(
                Collectors.toMap(
                    AbstractMap.SimpleImmutableEntry::getKey,
                    AbstractMap.SimpleImmutableEntry::getValue));

    TestPropertyValues.of(updateConfig).applyTo(applicationContext.getEnvironment());
  }
}
```

```java
// HelloWorldIntegrationTest.java
package com.suman.hello;

import static org.springframework.boot.test.context.SpringBootTest.WebEnvironment.RANDOM_PORT;

import org.junit.jupiter.api.AfterEach;
import org.junit.jupiter.api.BeforeEach;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.annotation.DirtiesContext;
import org.springframework.test.context.ActiveProfiles;
import org.springframework.test.context.ContextConfiguration;

import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
import com.suman.ConfigRandomizer;
import com.suman.CustomActiveProfilesResolver;
import com.suman.config.LocalDynamoConfig;

@DirtiesContext
@ActiveProfiles(value = "test", resolver = CustomActiveProfilesResolver.class)
@ContextConfiguration(initializers = ConfigRandomizer.class)
@SpringBootTest(webEnvironment = RANDOM_PORT, classes = {LocalDynamoConfig.class})
class HelloWorldIntegrationTest {

  @Autowired private DynamoDBMapper dynamoDBMapper;

  @BeforeEach
  void setUp() {
    // ... create resources if needed
  }

  @AfterEach
  void cleanUp() {
    // ... cleanup resources if needed
  }

  @Test
  void shouldAbc() {
    // ...
  }

  @Test
  void shouldAbcd() {
    // ...
  }
}
```

Apart from LocalDynamoConfig, all of the code snippets above are borrowed from my previous blog post.

These are all the settings and tweaks needed to use DynamoDBMapper in parallel integration tests without changing any production source code.
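To see why this isolates parallel runs, the following stdlib-only sketch models the flow under a few assumptions: the class and method names are hypothetical, the random part imitates `RandomStringUtils.randomAlphabetic(5).toLowerCase()` with `java.util.Random`, and the prefix format copies the `"%s_%s"` pattern used in `ConfigRandomizer`. Each test context draws its own prefix, so two test classes running at the same time end up with different physical table names for the same annotated table.

```java
import java.util.Random;

// Hypothetical, stdlib-only model of how each test context ends up with its own table names.
final class ParallelTablePrefixes {

  private ParallelTablePrefixes() {}

  // Imitates RandomStringUtils.randomAlphabetic(5).toLowerCase(): five letters a-z
  static String randomPart(Random random) {
    StringBuilder sb = new StringBuilder(5);
    for (int i = 0; i < 5; i++) {
      sb.append((char) ('a' + random.nextInt(26)));
    }
    return sb.toString();
  }

  // Same "%s_%s" shape as ConfigRandomizer: random part, "_", then the configured value (or "")
  static String randomizedPrefix(String randomPart, String configuredValue) {
    return String.format("%s_%s", randomPart, configuredValue == null ? "" : configuredValue);
  }

  // A prefix override prepends the prefix to the annotated table name
  static String physicalTableName(String prefix, String annotatedName) {
    return prefix + annotatedName;
  }

  public static void main(String[] args) {
    Random random = new Random();
    // Two test classes running at the same time each build their own context and prefix
    String prefixA = randomizedPrefix(randomPart(random), "");
    String prefixB = randomizedPrefix(randomPart(random), "");
    System.out.println(physicalTableName(prefixA, "persons"));
    System.out.println(physicalTableName(prefixB, "persons"));
  }
}
```

With five lowercase letters there are 26^5 = 11,881,376 possible prefixes, so a clash between two concurrently running contexts is unlikely, though not impossible.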

I hope these pointers clarify how to set up DynamoDBMapper for parallel integration tests. Feel free to share your feedback and opinions 😄.

Originally posted on medium.com.